by Duke Okes

The concept of ranking or rating audit results is beginning to take hold, although for now perhaps more from an external audit perspective. Both the medical device MDSAP (Medical Device Single Audit Program) and the aerospace AS9101 standard provide a means of scoring audit findings. However, for internal audits, an earlier article in The Auditor Online presented an idea for moving beyond the binary major/minor classification of nonconformities (NCs), and a later article on LinkedIn expanded on it.

A Review of the Concept

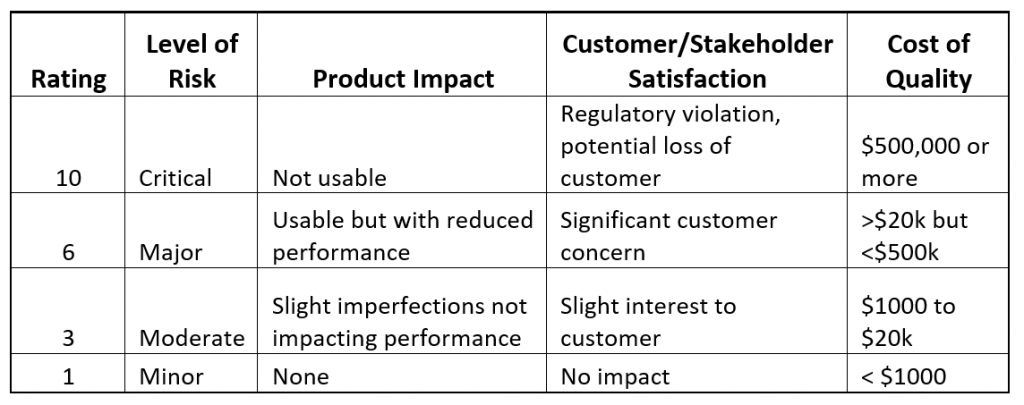

Instead of calling each NC a major or minor, each should be ranked based on the relative degree of risk. Table 1 describes impact on three primary quality objectives for each level of risk and a number has been assigned to each level (note: using a nonlinear scale such as one, three, six, and 10 helps to better differentiate between low and high levels).

Table 1 – Risk Level Descriptions
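To make the note about nonlinear spacing concrete, here is a minimal Python sketch comparing the one, three, six, and 10 scale with a linear one-to-four scale. The level names (`low`, `moderate`, `high`, `critical`) and the helper `top_to_bottom_ratio` are hypothetical placeholders, since the actual level descriptions are in Table 1; only the scores come from the article.

```python
# Hypothetical level names; only the scores 1, 3, 6, 10 come from the article.
NONLINEAR_SCALE = {"low": 1, "moderate": 3, "high": 6, "critical": 10}
# A linear scale for comparison (assumption, not from the article).
LINEAR_SCALE = {"low": 1, "moderate": 2, "high": 3, "critical": 4}


def top_to_bottom_ratio(scale: dict[str, int]) -> float:
    """How many lowest-level NCs it takes to equal one highest-level NC."""
    return max(scale.values()) / min(scale.values())


for name, scale in (("linear", LINEAR_SCALE), ("nonlinear", NONLINEAR_SCALE)):
    ratio = top_to_bottom_ratio(scale)
    print(f"{name}: one top-level NC weighs as much as {ratio:g} low-level NCs")
```

On the linear scale a top-level NC counts only as much as four low-level ones; on the nonlinear scale it counts as much as 10, so aggregate scores are driven more strongly by the high-risk findings.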

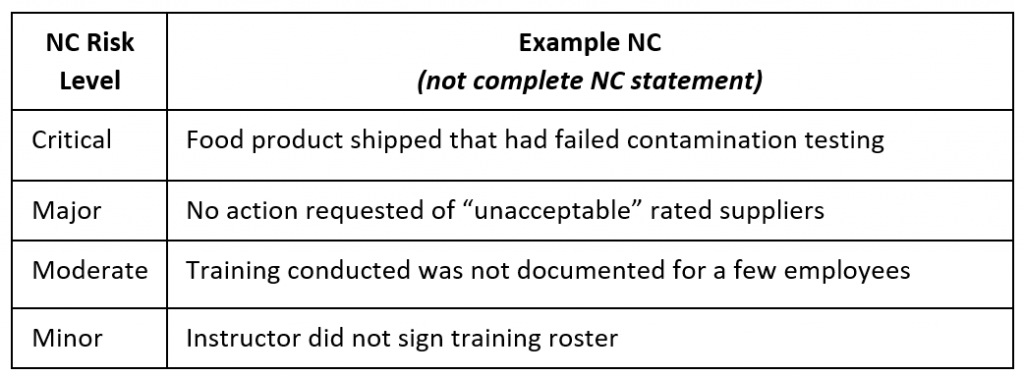

Each NC can then be evaluated to see which risk level is most appropriate. Note: Ranking can be done based on all three objectives, or a single ranking used according to the highest level of potential impact. Table 2 shows an example of NCs that might fit to each level of risk.

Table 2 – Example NCs
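The two ranking options can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the author's method: customer satisfaction is the only objective the article names, so `objective_b` and `objective_c` are placeholders, the level names are hypothetical, and the example NC is invented.

```python
from dataclasses import dataclass

# Hypothetical level names mapped to the article's scores.
SCORES = {"low": 1, "moderate": 3, "high": 6, "critical": 10}

# Customer satisfaction is named in the article; the other two are placeholders.
OBJECTIVES = ("customer_satisfaction", "objective_b", "objective_c")


@dataclass
class Nonconformity:
    description: str
    levels: dict[str, str]  # objective name -> risk level name


def score_all_objectives(nc: Nonconformity) -> dict[str, int]:
    """Option 1: rank the NC against each objective separately."""
    return {obj: SCORES[nc.levels[obj]] for obj in OBJECTIVES}


def score_highest_impact(nc: Nonconformity) -> int:
    """Option 2: a single ranking based on the highest potential impact."""
    return max(score_all_objectives(nc).values())


# Invented example NC, for illustration only.
nc = Nonconformity(
    "Hypothetical: calibration record missing for one gauge",
    {"customer_satisfaction": "moderate", "objective_b": "high", "objective_c": "low"},
)
print(score_all_objectives(nc))
print(score_highest_impact(nc))
```

Scoring all three objectives keeps more information for the aggregate analysis; the single highest-impact score is simpler to assign and compare.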

Aggregating Audit NC Risks

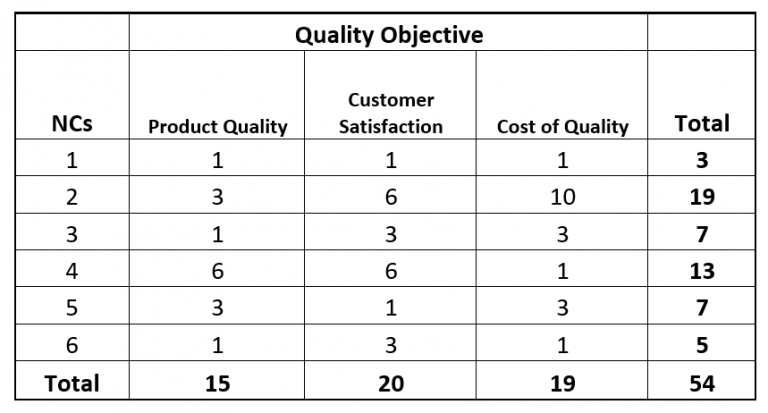

Suppose an audit has been conducted with a total of six nonconformities found. Ranking each (in this case against all three objectives) might result in Table 3.

Table 3 – Aggregate analysis of NCs from an audit

Note that this analysis shows not only which NCs are more important (based on the total score for each NC), and therefore how much effort should be invested in corrective action, but also which quality objectives are most at risk (based on the total score for each objective). The overall total score of 54 (an average of nine per NC) could be interpreted as the total level of risk identified during this audit.
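The row and column totals described above can be computed with a short Python sketch. The scores below are invented for illustration and are not the values behind Table 3; the objective names other than customer satisfaction are placeholders.

```python
# Hypothetical audit: each NC scored 1, 3, 6, or 10 against three objectives.
# These scores are invented and are NOT the data behind Table 3.
OBJECTIVES = ("customer_satisfaction", "objective_b", "objective_c")

audit = {
    "NC1": (3, 1, 1),
    "NC2": (6, 3, 1),
    "NC3": (1, 1, 3),
    "NC4": (10, 6, 3),
    "NC5": (1, 3, 1),
}


def aggregate(scores: dict[str, tuple[int, ...]]):
    # Row totals: relative importance of each NC (guides corrective-action effort).
    per_nc = {nc: sum(row) for nc, row in scores.items()}
    # Column totals: which objective is most at risk.
    per_objective = {
        obj: sum(row[i] for row in scores.values()) for i, obj in enumerate(OBJECTIVES)
    }
    total = sum(per_nc.values())
    average = total / len(scores)
    return per_nc, per_objective, total, average


per_nc, per_objective, total, average = aggregate(audit)
print(sorted(per_nc.items(), key=lambda kv: kv[1], reverse=True))  # most important NC first
print(max(per_objective, key=per_objective.get))  # objective most at risk
print(total, average)
```

For this invented data the overall total is 44 (an average of 8.8 per NC), NC4 is the most important finding, and customer satisfaction is the objective most at risk.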

Trending the Data

Trending the number of NCs per audit is of some value, but the value increases considerably if the level of risk is also considered. Trending the number of majors versus minors is one way to do this, but it has very poor resolution because it is attribute data, and it will also likely be based on small sample sizes. An ordinal scale such as the one presented above allows the data to be manipulated as if it were variable data. Some simple examples include:

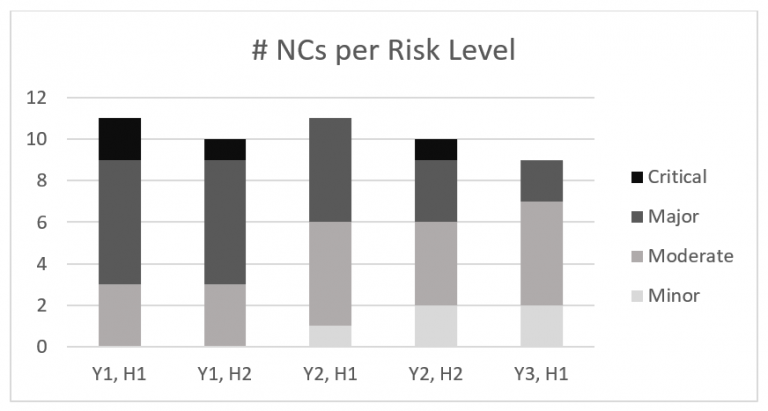

- Stacked bar charts showing the number of NCs for each level over time (see Figure 1)

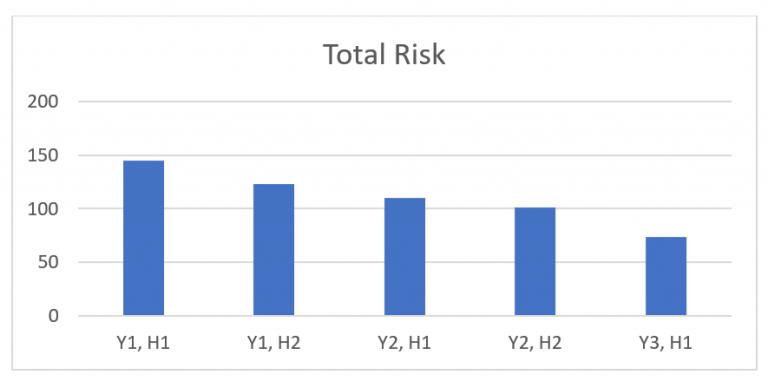

- The total or average risk level over time (see Figure 2)

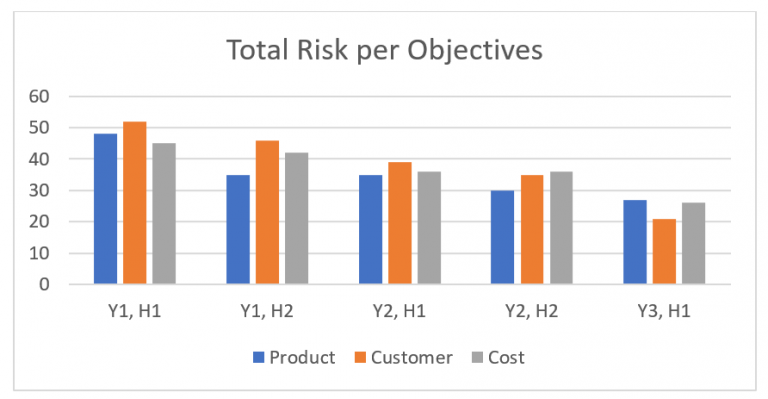

- Trends in risk level by objectives (see Figure 3)

Figure 1 – Trend of number of risks/level over time (audits conducted every six months)

Figure 2 – Trend of total risk over time

Figure 3 – Trend of total risk per objective
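The series behind charts like these can be prepared with a Python sketch such as the one below, whose output could then be fed to any charting tool. The audit data are invented, and counting each NC at its highest-scoring level for the stacked bars is one reasonable reading (counting every objective score separately is another); the objective names other than customer satisfaction are placeholders.

```python
from collections import Counter

LEVELS = (1, 3, 6, 10)
# Placeholder objective names, except customer satisfaction.
OBJECTIVES = ("customer_satisfaction", "objective_b", "objective_c")

# Invented data: audit label -> list of NCs, each scored against three objectives.
audits = {
    "audit 1": [(10, 6, 3), (6, 3, 1), (3, 1, 1), (1, 1, 1)],
    "audit 2": [(6, 3, 3), (3, 3, 1), (1, 1, 3)],
    "audit 3": [(3, 1, 1), (1, 3, 1), (1, 1, 1)],
}


def summarize(ncs):
    totals = [sum(nc) for nc in ncs]
    # Assumption: each NC is counted once, at its highest level.
    level_counts = Counter(max(nc) for nc in ncs)
    return {
        "count": len(ncs),
        "per_level": [level_counts.get(level, 0) for level in LEVELS],  # stacked bars
        "total": sum(totals),  # total risk trend
        "average": sum(totals) / len(ncs) if ncs else 0.0,  # average risk trend
        "per_objective": [sum(nc[i] for nc in ncs) for i in range(len(OBJECTIVES))],
    }


trend = {label: summarize(ncs) for label, ncs in audits.items()}
for label, row in trend.items():
    print(label, row)
```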

As these graphs indicate, while the number of NCs was reduced by only 18 percent (from 11 to nine) during this two-and-a-half-year period, the total risk decreased by nearly 50 percent (from 145 to 73). And while customer satisfaction was the top risk for most of the period, it is now lower than the other objectives.
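The two percentages follow directly from the start and end values quoted above; a quick Python check (the helper name `percent_reduction` is just illustrative):

```python
def percent_reduction(start: float, end: float) -> float:
    """Percentage decrease from start to end."""
    return (start - end) / start * 100


# Start and end values quoted in the article for the two-and-a-half-year period.
nc_drop = percent_reduction(11, 9)
risk_drop = percent_reduction(145, 73)
print(f"NC count down {nc_drop:.1f}%, total risk down {risk_drop:.1f}%")
```

This prints a drop of about 18.2 percent in NC count and about 49.7 percent in total risk, which is why the risk-weighted view shows much more improvement than the raw count.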

Audit scope and time frame may also need to be considered, especially if the audit schedule consists of more frequent, shorter audits rather than less frequent audits of the entire system. In that case, it may be necessary to base the trend analysis on NCs consolidated from multiple audits.
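One way to consolidate is to pool NCs from all audits that fall within a fixed window before trending. The Python sketch below assumes six-month windows (matching the interval in Figure 1) and uses an invented audit schedule; the areas, dates, and scores are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Invented schedule of short, partial-scope audits; each NC is a tuple of
# objective scores on the 1/3/6/10 scale.
audits = [
    (date(2023, 2, 10), "purchasing", [(3, 1, 1)]),
    (date(2023, 4, 18), "production", [(6, 3, 1), (1, 1, 3)]),
    (date(2023, 9, 5), "design", [(3, 3, 1)]),
    (date(2023, 11, 22), "service", [(1, 1, 1), (3, 1, 1)]),
]


def half_year(d: date) -> str:
    """Label a date with its half-year window, e.g. '2023-H1'."""
    return f"{d.year}-H{1 if d.month <= 6 else 2}"


def consolidate(audit_list):
    """Pool NCs from all audits that fall in the same half-year window."""
    pooled = defaultdict(list)
    for when, _area, ncs in audit_list:
        pooled[half_year(when)].extend(ncs)
    return {
        window: {"count": len(ncs), "total": sum(sum(nc) for nc in ncs)}
        for window, ncs in sorted(pooled.items())
    }


print(consolidate(audits))
```

Each pooled window can then be trended the same way as a single full-system audit.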

Other Issues

In an organization with few NCs (which hopefully means an effective management system), another possibility is to include NCs from multiple management systems, such as quality (QMS), environmental (EMS), or occupational health and safety (OHS). Although the objectives might differ, the same risk-level scoring mechanism could perhaps be used. Another possibility is to combine results from multiple facilities where feasible.
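A minimal Python sketch of such pooling, assuming each NC is tagged with its management system and facility and carries a single highest-impact score on the same one, three, six, and 10 scale. Keeping only one score per NC is an assumption made here so that NCs remain comparable even though each system's objectives differ; the records themselves are invented.

```python
from collections import defaultdict

# Invented NCs pooled from several management systems and facilities.
# Assumption: one highest-impact score (1, 3, 6, or 10) per NC, so NCs from
# systems with different objectives can be compared on the same scale.
ncs = [
    {"system": "QMS", "facility": "Plant A", "score": 6},
    {"system": "EMS", "facility": "Plant A", "score": 3},
    {"system": "OHS", "facility": "Plant B", "score": 10},
    {"system": "QMS", "facility": "Plant B", "score": 1},
    {"system": "EMS", "facility": "Plant B", "score": 3},
]


def total_by(records, key):
    """Sum the risk scores of the records, grouped by the given tag."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key]] += record["score"]
    return dict(totals)


print(total_by(ncs, "system"))
print(total_by(ncs, "facility"))
```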

Another consideration when the number of NCs is low is how well the organization is actually performing against its results and objectives. The purpose of auditing is to evaluate the controls (the Xs) the organization uses to influence results (the Ys), and ideally to identify weaknesses before their impact is realized. However, the controls may not always be well aligned with the results, so it can be useful to look at performance against objectives and consider how the audit process might need to change.

Finally, it is important to consider the language used for risk ranking. General counsel in many organizations is likely to have a heart attack if the word “critical” is used, so clear the terminology with them before starting such an initiative. In other words, consider the risks that the risk-ranking process itself might create.

About the author

Duke Okes has been in private practice for 34 years as a trainer, consultant, writer, and speaker on quality management topics. His book titled “Musings on Internal Quality Audits: Having a Greater Impact” was published by ASQ Quality Press in 2017. He is an ASQ Fellow and holds certifications as a CMQ/OE, CQE, and CQA.
